|

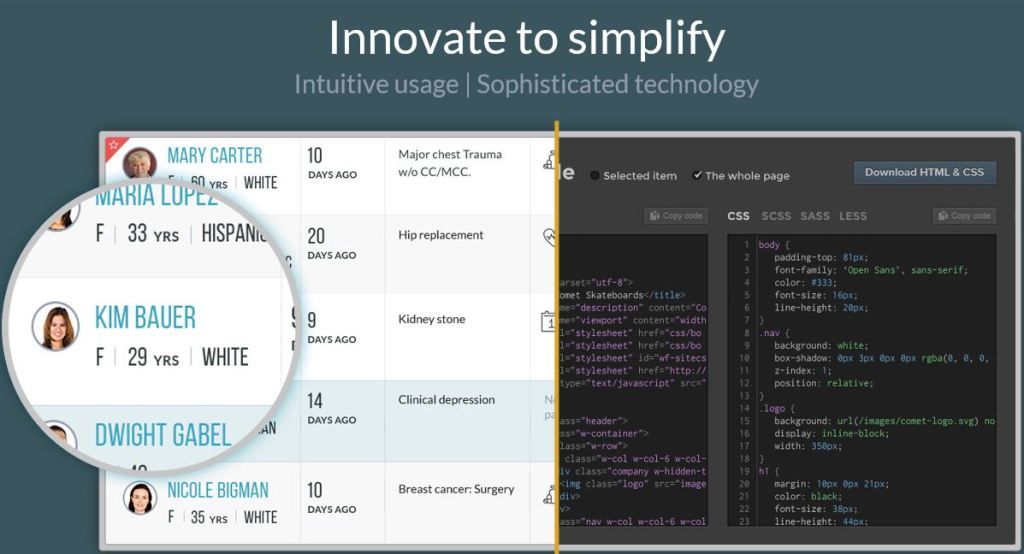

| An EHR screenshot... but where is that diagnosis of diabetes? |

Years ago, if you were elderly, had diabetes, high blood pressure, low back pain, needed a yearly flu shot and came to see this electronic health record-enabled physician (now with the nom de plume "Disease Management Care Blog"), you would have had your diabetes, high blood pressure and low back pain reassessed, you would have been given a flu shot and, for good measure, the DMCB would have tossed in a discussion about the unpleasantness of getting screened for cancer. After the indignity of your physical exam, the DMCB would have typed its clinic notes into the EHR. Then the DMCB would have [click!] opened a new window,[click!] and "processed" your clinic encounter by selecting a [click!] "principal diagnosis" and some [click!] "secondary diagnoses," set a [click!] "level of care" and then [click!] "closed" the record.

And after all that, it's very possible that, thanks to multiple diagnoses, entry fields and the press of time, the DMCB would never have selected [or clicked!] "diabetes." In other words, the diagnosis of diabetes would have been an electronic tree in the forest that no one would have heard falling.

It turns out that this is another shortcoming of the EHR that fails to get mentioned by its naive paladins. While these weenies would have you believe that physicians can use this technology to magically retrieve any information on any sick patient around the world, the truth is that these systems are heavily reliant on old-fashioned human behavior. Relying on standard diagnosis coding to identify every patient with every condition remains notoriously inaccurate.

That's why work-arounds have become necessary. An interesting one is the Centers for Disease Control's "BioSense." It uses the words or text that are entered into an electronic record's chief complaint "field" in emergency rooms and clinics. Combinations of certain keywords are consistently associated, for example, with influenza and can even act as an early warning system that heralds an outbreak of the disease. Using text is also the logic behind Google's ability to gauge the presence of disease among users of its search engine.
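For the technically curious, the keyword idea can be sketched in a few lines of Python. This is only an illustration of matching keyword combinations in a chief complaint field and counting hits per day. The keyword list, the function names and the sample visits are all the DMCB's assumptions, not BioSense's actual rules or data.

```python
import re

# Hypothetical keyword combinations; BioSense's real rules are not given in this post.
FLU_LIKE_COMBOS = [
    {"fever", "cough"},
    {"fever", "sore throat"},
]

def normalize(text):
    """Lowercase the complaint and collapse anything that is not a letter to a space."""
    return re.sub(r"[^a-z]+", " ", text.lower()).strip()

def looks_flu_like(chief_complaint):
    """Return True if every keyword of at least one combination appears."""
    cleaned = f" {normalize(chief_complaint)} "
    return any(
        all(f" {keyword} " in cleaned for keyword in combo)
        for combo in FLU_LIKE_COMBOS
    )

def daily_counts(visits):
    """Count flu-like chief complaints per day from (date, complaint) pairs."""
    counts = {}
    for day, complaint in visits:
        if looks_flu_like(complaint):
            counts[day] = counts.get(day, 0) + 1
    return counts

# Made-up visits for illustration only.
visits = [
    ("2012-01-03", "Fever, cough x3 days"),
    ("2012-01-03", "Ankle sprain"),
    ("2012-01-04", "sore throat and FEVER"),
    ("2012-01-04", "Cough, fever, body aches"),
]
print(daily_counts(visits))
```

A rising daily count is the kind of signal that could prompt a closer look. Exact word matching like this misses misspellings and variants such as "feverish," which is one reason real systems are more elaborate.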

With that background on the EHR, its coding travails and influenza, members of the population health management community may want to pay attention to this Mayo Clinic study that was recently published in the Annals of Internal Medicine.

"Why?" you ask? Read on.

Persons who had been laboratory tested for the presence of influenza comprised the study population. 1455 persons had the virus while 15,788 did not. Of the 1455 persons with influenza, 1203 had an encounter recorded by a provider at the same time as the test. The encounters were the typical "free text" typed notes that were composed by providers and included a history (including patient symptoms) and a physical examination (such as the presence of a fever or a red throat). 1455 patients with a "negative" test (i.e., no virus) were selected as control patients and of these, 905 had a recorded typed encounter.

The providers' free text encounter records were then scanned using a "Multithreaded Clinical Vocabulary Server" (MCVS) system. As the DMCB understands it, this looks for certain key words, terms and phrases in the providers' notes as well as associated x-ray reports and lab tests that, based on prior studies, seem to be associated with the presence of the influenza virus. The extract (dubbed a "synthetic derivative") was then downloaded into "SNOMED CT," which acts as a medical dictionary of medical concepts that can be used in regression algorithms to predict the presence or absence of influenza. That prediction ("the patient has influenza") was compared to the gold standard of the viral testing ("influenza virus really found").
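As a rough illustration of what "scanning" a note might involve, here is a minimal Python sketch that maps phrases to concepts, skips crudely negated mentions and turns the result into 0/1 features that a regression could use. It is not MCVS. The phrase dictionary, the negation cues and the concept identifiers are placeholders invented for this example and are not real SNOMED CT codes.

```python
import re

# Hypothetical phrase-to-concept dictionary. The identifiers are placeholders,
# not real SNOMED CT codes.
CONCEPTS = {
    "fever": "C_FEVER",
    "febrile": "C_FEVER",
    "cough": "C_COUGH",
    "myalgia": "C_MYALGIA",
    "muscle aches": "C_MYALGIA",
}

# Assumed negation cues; real clinical NLP handles negation far more carefully.
NEGATION_CUES = ("no ", "denies ", "without ")

def extract_concepts(note):
    """Return the set of concepts asserted (not negated) in a free-text note."""
    found = set()
    for sentence in re.split(r"[.;\n]+", note.lower()):
        for phrase, concept in CONCEPTS.items():
            match = re.search(r"\b" + re.escape(phrase) + r"\b", sentence)
            if not match:
                continue
            # Crude negation check: a cue anywhere earlier in the same sentence.
            before = sentence[:match.start()]
            if any(cue in before for cue in NEGATION_CUES):
                continue
            found.add(concept)
    return found

def to_features(concepts, vocabulary):
    """Turn a set of concepts into a 0/1 vector in a fixed vocabulary order."""
    return [1 if c in concepts else 0 for c in vocabulary]

note = "Patient is febrile with a dry cough. Denies muscle aches."
vocabulary = ["C_COUGH", "C_FEVER", "C_MYALGIA"]
concepts = extract_concepts(note)
print(sorted(concepts))
print(to_features(concepts, vocabulary))
```

The point of mapping "fever" and "febrile" to one concept is that providers describe the same finding in different words, and a model should see them as the same thing.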

How did MCVS do vs. the lab? By combining certain terms in the records ("fever" and "cough" for example) and excluding others (an abnormal chest x-ray, which is unusual in viral influenza) the authors found, compared to viral testing, a remarkable degree of accuracy: using a receiver operating characteristic (ROC) curve that reconciles testing accuracy over a range of assumptions, the rate for MCVS exceeded 90%. In other words, the description of the illness spotted the disease prior to any lab testing.
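For readers who want to see what "comparing to the gold standard" can look like, here is a small Python sketch. It scores each note with a toy rule (fever and cough count for influenza, an abnormal chest x-ray counts against it) and then computes the area under the ROC curve against the lab result. The scoring weights and the six patients are made up by the DMCB, and the resulting number has nothing to do with the study's actual findings.

```python
def rule_score(concepts):
    """Toy score: reward fever and cough, penalize an abnormal chest x-ray."""
    score = 0
    if "fever" in concepts:
        score += 1
    if "cough" in concepts:
        score += 1
    if "abnormal_chest_xray" in concepts:
        score -= 1
    return score

def roc_auc(scores, labels):
    """Area under the ROC curve, computed as the probability that a randomly
    chosen positive outscores a randomly chosen negative (ties count one half)."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))

# Made-up data: (concepts found in the note, lab result where 1 = virus found).
patients = [
    ({"fever", "cough"}, 1),
    ({"fever", "cough"}, 1),
    ({"cough"}, 1),
    ({"fever", "cough", "abnormal_chest_xray"}, 0),
    ({"cough"}, 0),
    (set(), 0),
]
scores = [rule_score(c) for c, _ in patients]
labels = [y for _, y in patients]
print(roc_auc(scores, labels))
```

An area of 0.5 means the text is no better than a coin flip at separating lab-positive from lab-negative patients, while 1.0 means perfect separation.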

While the Annals paper focused on the ability of MCVS to act as a biosurveillance warning system that can spot infectious disease outbreaks early and accurately, the DMCB was intrigued by the implications for the population health management community and the care of persons with chronic disease.

Here's why:

1. With an accuracy rate of more than 90% for spotting persons with influenza, it's possible that systems like MCVS will likewise be able to identify those with known diabetes, COPD or heart disease who fail to have their condition officially tagged and recorded as a diagnosis. With systems like MCVS, health management providers will be able to overcome the shortcomings of the EHR and obtain a complete picture of their populations' disease burden.

2. What's more, systems like MCVS may, on the basis of a scan of multiple encounter notes about risk factors (for example thirst, excessive weight and a suggestive family history), also be able to spot persons with undiagnosed conditions. Furthermore, other factors could be used to prospectively identify those persons at greatest risk for future complications, such as an avoidable hospitalization. In other words, getting access to the medical records would be another step forward in the still evolving science of predictive modeling.

3. The DMCB also wonders if systems like MCVS will eventually be used to define and submit the diagnosis codes for the provider. Not only would this unburden the physicians from what is an administrative hassle, it'd probably be ultimately more accurate. Combine that with the detail of ICD-10 coding (assuming we can actually implement it), and we may finally be on the cusp of fully understanding the care needs of populations and be that much closer to collecting on the still-unfulfilled promise of the EHR.
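To make the first point above concrete, here is a hedged Python sketch of the simplest possible version: flag patients whose notes mention diabetes but whose coded diagnoses do not. The record layout, the text cues and the short list of code prefixes are the DMCB's assumptions for illustration. A real system would need negation handling, many more cues and clinical review of every flagged chart.

```python
import re

# Illustrative text cues and code prefixes only; far from a complete list.
DIABETES_TEXT = re.compile(r"\b(diabetes|diabetic|metformin)\b", re.IGNORECASE)
DIABETES_CODE_PREFIXES = ("250", "E10", "E11")  # ICD-9 250.xx; ICD-10 E10 and E11

def has_coded_diabetes(codes):
    """True if any recorded diagnosis code starts with a diabetes prefix."""
    return any(code.startswith(DIABETES_CODE_PREFIXES) for code in codes)

def uncoded_diabetes_candidates(patients):
    """Return IDs of patients whose notes mention diabetes but whose codes do not."""
    flagged = []
    for patient in patients:
        mentioned = any(DIABETES_TEXT.search(note) for note in patient["notes"])
        if mentioned and not has_coded_diabetes(patient["codes"]):
            flagged.append(patient["id"])
    return flagged

# Made-up records in a hypothetical layout: an ID, free-text notes, coded diagnoses.
patients = [
    {"id": "A", "notes": ["Diabetes stable on metformin. BP 138/84."],
     "codes": ["401.9", "724.2"]},
    {"id": "B", "notes": ["Diabetic foot exam normal."],
     "codes": ["250.00"]},
    {"id": "C", "notes": ["Low back pain, flu shot given."],
     "codes": ["724.2"]},
]
print(uncoded_diabetes_candidates(patients))
```

Patient A is the elderly patient from the top of this post: the note discusses diabetes, but only high blood pressure and low back pain were ever clicked.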

2 comments:

What's the title of the journal article discussing the Mayo Clinic study? Thanks.

Here's the link:

http://annals.org/article.aspx?articleid=1033257

Comparison of Natural Language Processing Biosurveillance Methods for Identifying Influenza From Encounter Notes
